From the Principal

A few weeks ago in this column, I shared with you our strategic priorities for 2023. Eagle-eyed readers will have spotted the third point under the Digital Intelligence pillar referring to our focus on the Digital Curriculum for K-12, which includes, among other things, “training staff to teach wise use of AI.”

Artificial Intelligence (AI) is a hot topic in the media right now, with numerous news articles and LinkedIn posts commenting on the growing capabilities of AI tools and the implications these will have for society. Whether you love it, hate it or are yet to form an opinion about it, AI is here to stay. As with the arrival of the internet, mobile phones and social media, which have since become embedded in our lives as well as the lives of our students, it makes no sense to ignore, ban or simply opine about AI. Our response is to do what we do best, which is teaching our students how to be informed, responsible and wise users of this new technology.

In Term 2, we will be holding a parent information evening to explain AI in more detail and to take you on a guided tour of some of the available tools. In the meantime, it is my pleasure to hand over to our Director of Innovative Learning Technologies, Anthony England, to share a top-level overview of AI, the challenges it poses and the College’s strategy for growing our students’ digital citizenship.

Hello and welcome to AI 101.

AI has been part of our lives for some time now, having crept in under the guise of efficiency to convert our voices to text or automated action – yes, I’m talking about you, Siri, Alexa and Google! This is pretty much a master/servant transaction in a modern context. Nothing too controversial, other than the occasional red face when Siri/Alexa/Google mishears your command and texts your doctor instead of your mother.

Then, late last year, the step change happened. AI tools started to have the capacity to make something that didn’t exist before, namely written content, artworks, and music, at the behest of the human operator.

Unsurprisingly, the darling that’s captured the imagination of journalists is ChatGPT, a tool that makes written content. The user inputs the leading concept, story or brief and the tool comes up with the most probable next chunks of words that make sense and that follow on from the prompts. ChatGPT has been trained to make these predictions well enough to generate something convincing and new.

Chat = the space where you input your prompts and the tool generates new content before your eyes, much like a text message exchange.

G = generative.

P = pre-trained.

T = transformer.

But text generation is only one part of the AI puzzle. There are also tools like DALL-E and Midjourney, both early examples of visual generation tools that are prompted by keywords to spit out a piece of art that you and I would be so proud of if we spent years making it – but they do it in 30 seconds. Say hello to our four pillars depicted as bugs or creatures in the style of a children’s book illustration, generated by Midjourney.

Then there are sound generation tools such as ElevenLabs, which allowed me to create an artificial version of my voice. I then uploaded a photo to D-ID to create this video clip that sounds like me, but is completely AI-generated. Or I can type a request for “groovy, orchestral comedy music” into SoundDraw and it will spit out audio that never existed before but ticks all those boxes.

These AI tools are not about knowing (hello, Google), but making. The onus has shifted from ‘what do I know?’ to ‘what do I want this co-pilot tool to create with me in the driver’s seat?’ And that, my friends, is the disruption of AI and the reason why wise use of it is one of our Digital Intelligence priorities for this year. Now that we have tools that are capable of instantaneously creating work that we used to spend a great deal of time and effort writing, designing or composing, how do we value and judge what’s nonsense and what’s real?

Whether our students are consumers of AI-created work or using AI as a co-pilot to create the work, our job as educators is to teach our young people how to discern what is important, good and true. They need to be able to judge what’s important, because there’s a great deal of information and images circulating online – and more still will come with the increased use of AI tools generating articles, posts and comments. They need to know what makes something good, better and better still. And they need to be the detectives who work out, in a sea of misinformation, which bit to trust, which bit challenges them to investigate further, and which bit is nonsense.

AI is also an opportunity for us as educators to amplify the importance of character and ethics because we now have access to tools that can mimic. In the ‘why bother?’ world where knowing can be outsourced to technology, what happens when convenience competes against creativity and the satisfaction of mastery? How do we promote the joy of writing, creating an artwork or a musical masterpiece if AI can spit out what you want to achieve in seconds rather than years of trying, failing, tweaking, learning and growing in both skill and expertise?

It may sound daunting, but we’re confident we’ve got this. We have always taught our students to be critical, curious and creative thinkers. Our four pillars of Academic, Social, Emotional and Digital Intelligence are well placed to provide our students with the toolkit and character to successfully navigate this emerging frontier.

AI is not a darling. It is a huge challenge and an even bigger opportunity for deep learning on so many levels. Our focus will be to foster the joy of slow, sustained growth in learning and the journey to mastery. Along the way, we will ensure our students can navigate and use AI wisely as a co-pilot and, most importantly, be able to recognise what is good, important and true in the online world. – Anthony England, Director of Innovative Learning Technologies

Driving thinking forward through research

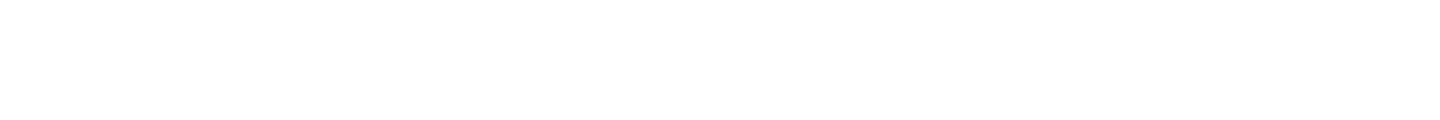

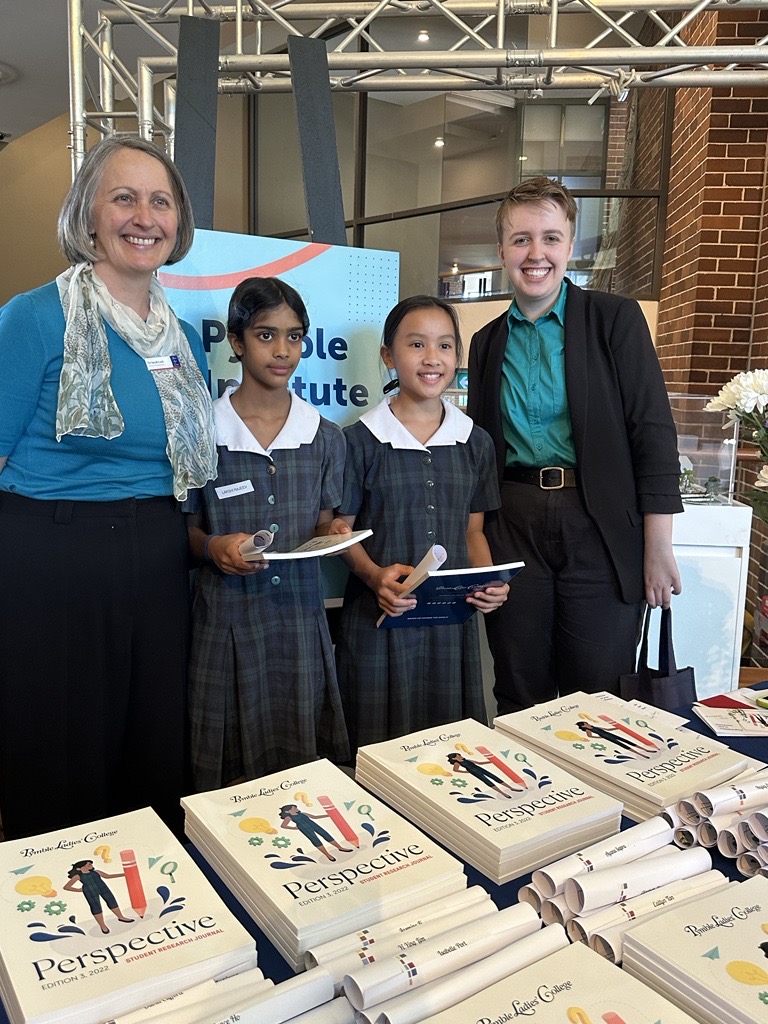

A great example of one of the myriad ways the College is equipping our students to be curious and discerning learners is our research centre, the Pymble Institute (PI). Through PI, students have the opportunity to take part in investigative projects, learn about and participate in ethics reviews, and generate their own research papers. Once a year, a selection of these student research papers is published in Perspective, which we proudly launched today in the presence of the authors, some of their parents, teachers and supporters, including our former student and now SKY TV producer, Grace Abadee (2019).

The journal includes the work of students ranging from Year 5 to Year 12 and was edited by a volunteer group of students, under the guidance of our Director of the Pymble Institute, Dr Sarah Loch. Congratulations to Dr Loch and all our students who have contributed to this seriously impressive showcase of student voice and evidence-based learning.

Opening our hearts and minds to newly arrived refugees

I know it’s not even Easter yet, but please check your diaries to see if you and your family are free to come along to The Village Championship on Sunday 7 May. You may remember this event from last year; it was instigated by one of our Year 12 students who was moved by the humanitarian crisis in Afghanistan to host an event to connect with and support newly arrived Afghan refugees in our community.

Once again, the festival will be held on the grounds of Pymble and includes music, speakers, Afghan food stalls and a friendly football match between mixed teams of Pymble students and Afghan schoolgirls. It’s a wonderful celebration of friendship, compassion and cultural diversity, made possible by open hearts, open minds and the universal language of kindness. I hope to see you there.

Dr Kate Hadwen, Principal
